Step-by-Step Guide to Boosting Enterprise RAG Accuracy

In my previous blog post, I wrote about how semantic chunking with newer models like Gemini 2.0 Flash, which have very large context windows, can significantly improve retrieval accuracy on unstructured data such as PDFs.

While exploring that, I started looking at other strategies to further improve response accuracy, given that in most large enterprises the tolerance for inaccuracy is, and should be, near zero. I experimented with a number of approaches along the way, and in this post let's walk through the steps that ultimately helped boost accuracy.

Before we get into the steps, though, let's look at the overall process from a higher level. To get more accurate results, we have to do two things significantly better:

- Extraction: Given a set of documents, extract both data and knowledge in a way that is inherently organized for better, more accurate retrieval.

- Retrieval: When a query comes in, apply pre- and post-retrieval steps and enrich the context with extracted “knowledge” for better results.

Now, let's look at the specific steps as they stand in the current state of the project. I have included pseudo-code in each step to keep the article digestible. For code-level specifics, check out my GitHub repo, where I have posted the code.

1. Extracting Knowledge from PDFs

The Flow

When a PDF enters the system, a few things need to happen: it gets stored, processed, chunked, embedded, and enriched with structured knowledge. Here’s how it all plays out:

Step 1: Upload & Record Creation

- A user uploads a PDF (support for other file types, such as audio and video, is coming soon).

- The system saves the file to disk (in the near future I will move this to an AWS S3 bucket to serve enterprise use cases better).

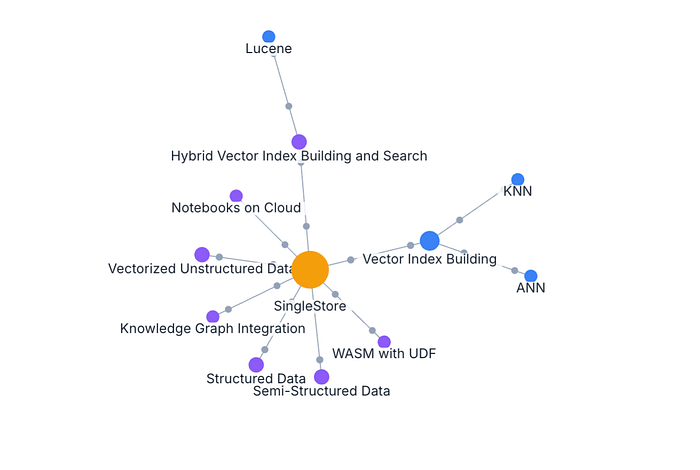

- A record is inserted into the database, and a processing status entry is created. For the database, I use SingleStore, since it supports multiple data types, hybrid search, and single-shot retrieval.

- A background job is queued to handle the PDF asynchronously. Because the overall processing takes a long time, this took me down a rabbit hole; I ultimately settled on Redis and Celery for job processing and tracking. This did make deployment a little painful, but we can get into that later.
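The upload flow in Step 1 boils down to: record the document, mark it "started", and hand the slow work to a background worker so the request returns immediately. Below is a minimal, standard-library-only sketch of that pattern, not the project's actual code: an in-memory SQLite table stands in for SingleStore, a `ThreadPoolExecutor` stands in for the Redis + Celery queue, and the table layout and the `handle_upload` / `process_pdf` helpers are hypothetical.

```python
import sqlite3
import threading
import uuid
from concurrent.futures import Future, ThreadPoolExecutor

# Hypothetical schema: one row per uploaded document with its processing status.
# In-memory SQLite stands in for SingleStore in this sketch.
conn = sqlite3.connect(":memory:", check_same_thread=False)
conn.execute("CREATE TABLE documents (doc_id TEXT PRIMARY KEY, filename TEXT, status TEXT)")
db_lock = threading.Lock()  # serialize access to the shared connection

# A thread pool stands in for the Redis + Celery worker queue.
executor = ThreadPoolExecutor(max_workers=2)


def set_status(doc_id: str, status: str) -> None:
    with db_lock:
        conn.execute("UPDATE documents SET status = ? WHERE doc_id = ?", (status, doc_id))
        conn.commit()


def get_status(doc_id: str) -> str:
    """What a polling front end would call."""
    with db_lock:
        row = conn.execute("SELECT status FROM documents WHERE doc_id = ?", (doc_id,)).fetchone()
    return row[0]


def process_pdf(doc_id: str, path: str) -> None:
    """Placeholder for the long-running parse/chunk/embed/extract pipeline."""
    set_status(doc_id, "processing")
    # ... parsing, chunking, embedding, and entity extraction would run here ...
    set_status(doc_id, "completed")


def handle_upload(filename: str, path: str) -> tuple[str, Future]:
    """Record the upload first, then queue the heavy work in the background."""
    doc_id = str(uuid.uuid4())
    with db_lock:
        conn.execute("INSERT INTO documents VALUES (?, ?, ?)", (doc_id, filename, "started"))
        conn.commit()
    future = executor.submit(process_pdf, doc_id, path)
    return doc_id, future  # an API handler would return doc_id right away for polling


doc_id, job = handle_upload("report.pdf", "/tmp/report.pdf")
job.result()  # block only for this demo; a web handler would not wait
print(get_status(doc_id))  # completed
```

Inserting the record before submitting the job guarantees the worker never runs against a missing row, which is the same ordering the pseudo-code below follows.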

```python
# Pseudo-code
save_file_to_disk(pdf)
db_insert(document_record, status="started")
queue_processing_task(pdf)
```

Step 2: Parse & Chunk PDF

- The file is opened and checked against size limits and for password protection, so that the process fails early if the file is not readable.

- The content is extracted as text/Markdown. This is a big topic in its own right: I initially used PyMuPDF for extraction, but later discovered LlamaParse from LlamaIndex, and switching over made my life significantly easier. The free tier of LlamaParse allows parsing 1,000 documents a day and offers a number of bells and whistles, such as returning results in different formats and better extraction of tables and images from PDFs.

- The document structure is analyzed (e.g., table of contents, headings, etc.).

- The text is split into meaningful chunks using a semantic approach. This is where I use Gemini 2.0 Flash, given its huge context window and significantly lower pricing.

- If semantic chunking fails, the system falls back to simpler segmentation.

- Overlaps are added between chunks to maintain context.
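The fallback and overlap steps are the deterministic part of this flow, so they are easy to sketch. This is a minimal illustration, not the project's chunker: it assumes the semantic chunker is any callable that returns a list of strings (and may raise or return nothing on failure), that the fallback packs paragraphs into chunks of at most 800 characters, and that overlap means prefixing each chunk with the last 100 characters of the previous one. All sizes and names here are illustrative; unlike the in-place `add_overlaps(chunks)` in the pseudo-code below, this version returns a new list.

```python
from typing import Callable


def fallback_chunking(text: str, max_chars: int = 800) -> list[str]:
    """Greedily pack blank-line-separated paragraphs into chunks of <= max_chars."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for para in paragraphs:
        # Hard-split any paragraph that exceeds the budget on its own.
        while len(para) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


def add_overlaps(chunks: list[str], overlap: int = 100) -> list[str]:
    """Prefix each chunk with the tail of its predecessor to preserve context."""
    if not chunks:
        return []
    overlapped = [chunks[0]]
    for prev, cur in zip(chunks, chunks[1:]):
        overlapped.append(prev[-overlap:] + "\n" + cur)
    return overlapped


def chunk_document(text: str, semantic_chunking: Callable[[str], list[str]]) -> list[str]:
    """Try the LLM-based chunker first; fall back to simple segmentation on failure."""
    try:
        chunks = semantic_chunking(text)
    except Exception:
        chunks = []  # treat an LLM error the same as an empty result
    return add_overlaps(chunks or fallback_chunking(text))


def failing_semantic_chunker(text: str) -> list[str]:
    raise TimeoutError("LLM call timed out")  # simulate a failed semantic-chunking call


doc = "\n\n".join(f"Paragraph {i}. " + "x" * 300 for i in range(5))
chunks = chunk_document(doc, failing_semantic_chunker)
print(len(chunks))  # 3
```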

```python
# Pseudo-code
validate_pdf(pdf)
text = extract_text(pdf)
chunks = semantic_chunking(text) or fallback_chunking(text)
add_overlaps(chunks)
```

Step 3: Generate Embeddings

- Each chunk is transformed into a high-dimensional vector using an embedding model. I use 1536-dimensional vectors, since that is what OpenAI's ada model (text-embedding-ada-002) produces.

- Next, both the chunk and its embedding are stored in the database. In SingleStore, we store the chunk text and its embedding in the same table, in two different columns, for easy maintainability and retrieval.
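Keeping the text and its vector in one row means a single lookup returns both. A minimal sketch of that layout, assuming an in-memory SQLite table as a stand-in for SingleStore and float32 packing via `struct` (the table and column names are hypothetical). At 4 bytes per float32, a 1536-dimensional vector takes 1536 × 4 = 6,144 bytes per row. The pseudo-code below calls the model once per chunk; the OpenAI embeddings endpoint also accepts a list of inputs, so batching chunks is a cheap way to cut round trips.

```python
import sqlite3
import struct

DIM = 1536  # text-embedding-ada-002 returns 1536-dimensional vectors


def pack_vector(vec: list[float]) -> bytes:
    """Pack floats as little-endian float32, 4 bytes each."""
    return struct.pack(f"<{len(vec)}f", *vec)


def unpack_vector(blob: bytes) -> list[float]:
    return list(struct.unpack(f"<{len(blob) // 4}f", blob))


# Hypothetical table: chunk text and its embedding side by side in one row.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE document_embeddings ("
    "chunk_id INTEGER PRIMARY KEY, doc_id TEXT, content TEXT, embedding BLOB)"
)

chunks = ["First chunk of text.", "Second chunk of text."]
for i, text in enumerate(chunks):
    vector = [0.001 * (i + 1)] * DIM  # placeholder; a real pipeline calls the embedding model here
    conn.execute(
        "INSERT INTO document_embeddings (doc_id, content, embedding) VALUES (?, ?, ?)",
        ("doc-1", text, pack_vector(vector)),
    )
conn.commit()

content, blob = conn.execute(
    "SELECT content, embedding FROM document_embeddings WHERE chunk_id = 2"
).fetchone()
print(content, len(blob))  # Second chunk of text. 6144
```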

```python
# Pseudo-code
for chunk in chunks:
    vector = generate_embedding(chunk.text)
    db_insert(embedding_record, vector)
```

Step 4: Extract Entities & Relationships using LLMs

- This step has one of the biggest impacts on overall accuracy. I send the semantically organized chunks to OpenAI and, with some targeted prompting, ask it to return the entities and relationships in each chunk. The result includes key entities (names, types, descriptions, aliases).

- Relationships between entities are mapped out. When the same entity shows up more than once, we update its existing record (including its category) with the enriched data instead of adding a duplicate.

- The extracted “knowledge” is now stored in structured tables.
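The de-duplication described above can be sketched as a merge keyed on a normalized entity name. This is a minimal illustration under stated assumptions: the JSON shape the LLM is asked to return (an `entities` list whose items have `name`, `category`, `description`, and `aliases`) is hypothetical, and an in-memory dict stands in for the Entities table.

```python
import json


def normalize(name: str) -> str:
    """Case- and whitespace-insensitive key used to detect duplicate entities."""
    return " ".join(name.lower().split())


def merge_entities(store: dict, llm_output: str) -> None:
    """Merge one chunk's extracted entities into the store instead of duplicating them."""
    data = json.loads(llm_output)
    for ent in data.get("entities", []):
        key = normalize(ent["name"])
        existing = store.get(key)
        if existing is None:
            store[key] = {
                "name": ent["name"],
                "category": ent.get("category"),
                "descriptions": [ent["description"]] if ent.get("description") else [],
                "aliases": set(ent.get("aliases", [])),
            }
            continue
        # Enrich the existing record rather than inserting a second row.
        existing["aliases"].update(ent.get("aliases", []))
        desc = ent.get("description")
        if desc and desc not in existing["descriptions"]:
            existing["descriptions"].append(desc)
        existing["category"] = ent.get("category") or existing["category"]


store = {}
merge_entities(store, json.dumps({"entities": [
    {"name": "SingleStore", "category": "Database",
     "description": "Distributed SQL database", "aliases": ["S2"]}]}))
merge_entities(store, json.dumps({"entities": [
    {"name": "singlestore", "category": "Vector database",
     "description": "Supports hybrid search", "aliases": ["MemSQL"]}]}))
print(len(store), sorted(store["singlestore"]["aliases"]))  # 1 ['MemSQL', 'S2']
```

Exact-name matching after normalization is the simplest policy; matching on aliases or embedding similarity catches more duplicates at the cost of occasional false merges.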

```python
# Pseudo-code
for chunk in chunks:
    entities, relationships = extract_knowledge(chunk.text)
    db_insert(entities)
    db_insert(relationships)
```

Step 5: Final Processing Status

- If everything processes correctly, the status is updated to “completed.” This lets the front end keep polling and show the correct status at any time.

- If something fails, the status is marked as “failed,” and any temporary data is cleaned up.
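The completed/failed branch maps naturally onto a `try`/`except` around the pipeline, with partial rows deleted on failure. A minimal sketch, again with in-memory SQLite standing in for SingleStore; the `documents`/`chunks` tables and the `run_pipeline` helper (which can be told to fail midway) are hypothetical. Explicit cleanup is used here rather than one big transaction, because a job that spends minutes on LLM calls is a poor fit for a single long-lived transaction.

```python
import sqlite3
from typing import Optional

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE documents (doc_id TEXT PRIMARY KEY, status TEXT);
    CREATE TABLE chunks (doc_id TEXT, content TEXT);
    """
)


def update_status(doc_id: str, status: str) -> None:
    conn.execute("UPDATE documents SET status = ? WHERE doc_id = ?", (status, doc_id))
    conn.commit()


def cleanup_partial_data(doc_id: str) -> None:
    """Delete whatever earlier steps already wrote for this document."""
    conn.execute("DELETE FROM chunks WHERE doc_id = ?", (doc_id,))
    conn.commit()


def run_pipeline(doc_id: str, texts: list[str], fail_after: Optional[int] = None) -> None:
    """Hypothetical pipeline: writes chunks one by one and can be made to fail midway."""
    for i, text in enumerate(texts):
        if fail_after is not None and i == fail_after:
            raise RuntimeError("entity extraction failed")
        conn.execute("INSERT INTO chunks VALUES (?, ?)", (doc_id, text))
        conn.commit()


def process_document(doc_id: str, texts: list[str], fail_after: Optional[int] = None) -> str:
    conn.execute("INSERT INTO documents VALUES (?, 'started')", (doc_id,))
    conn.commit()
    try:
        run_pipeline(doc_id, texts, fail_after)
    except Exception:
        update_status(doc_id, "failed")
        cleanup_partial_data(doc_id)
        return "failed"
    update_status(doc_id, "completed")
    return "completed"


print(process_document("a", ["c1", "c2"]))                # completed
print(process_document("b", ["c1", "c2"], fail_after=1))  # failed
print(conn.execute("SELECT COUNT(*) FROM chunks WHERE doc_id = 'b'").fetchone()[0])  # 0
```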

```python
# Pseudo-code
if success:
    update_status("completed")
else:
    update_status("failed")
    cleanup_partial_data()
```

When these steps complete, we have semantic chunks, their embeddings, and the entities and relationships found in the document, all stored in tables that reference each other.

We are now ready for the next step: retrieval.

2. Retrieving Knowledge (RAG Pipeline)

The Flow

Now that the data is structured and stored, we need to retrieve it effectively when a user asks a question. The system processes the query, finds relevant information, and generates a response.

Step 1: User Query

- The user submits a query to the system.

```python
# Pseudo-code
query = get_user_query()
```

Step 2: Preprocess & Expand Query

- The system normalizes the query (removing punctuation and normalizing whitespace) and expands it with synonyms. Here again I use an LLM, served through Groq for faster processing.
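The normalization half of this step is deterministic, so it can be sketched directly. For expansion, the sketch swaps the LLM call for a small static synonym dictionary (hypothetical) so it runs without any API; lowercasing is an added assumption, harmless for case-insensitive full-text matching.

```python
import string

# Hypothetical domain dictionary; the pipeline described here uses an LLM for expansion.
SYNONYMS = {
    "revenue": ["sales", "turnover"],
    "ceo": ["chief executive officer"],
}

# Map every ASCII punctuation character to a space.
_PUNCT_TO_SPACE = str.maketrans(string.punctuation, " " * len(string.punctuation))


def preprocess_query(query: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    return " ".join(query.lower().translate(_PUNCT_TO_SPACE).split())


def expand_query(query: str) -> str:
    """Append known synonyms for each term, skipping duplicates."""
    terms = query.split()
    extra = []
    for term in terms:
        for syn in SYNONYMS.get(term, []):
            if syn not in terms and syn not in extra:
                extra.append(syn)
    return " ".join(terms + extra)


q = preprocess_query("  What was Q3 revenue,  per the CEO?? ")
print(q)                # what was q3 revenue per the ceo
print(expand_query(q))  # what was q3 revenue per the ceo sales turnover chief executive officer
```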

```python
# Pseudo-code
query = preprocess_query(query)
expanded_query = expand_query(query)
```

Step 3: Embed Query & Search Vectors

- The query is embedded into a high-dimensional vector. I use the same ada model that I used earlier for extraction.

- The system searches the document embeddings for the closest matches using semantic (vector) search. I use SingleStore's dot product (DOT_PRODUCT, or the <*> operator) for this.
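Conceptually, vector search scores every stored chunk by its dot product with the query vector and keeps the top k. OpenAI's ada-002 embeddings are normalized to unit length, so the dot product equals cosine similarity. A brute-force sketch in plain Python with toy 3-dimensional vectors; `top_k_by_dot_product` is a hypothetical helper, and the database performs the same scoring server-side.

```python
import heapq
import math


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def normalize(v: list[float]) -> list[float]:
    """Scale to unit length so that the dot product equals cosine similarity."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def top_k_by_dot_product(query_vec, rows, k=10):
    """rows: iterable of (chunk_id, vector). Returns [(score, chunk_id)], best first."""
    return heapq.nlargest(k, ((dot(query_vec, vec), cid) for cid, vec in rows))


rows = [
    ("c1", normalize([1.0, 0.0, 0.0])),
    ("c2", normalize([0.7, 0.7, 0.0])),
    ("c3", normalize([0.0, 0.0, 1.0])),
]
query = normalize([1.0, 0.2, 0.0])
for score, cid in top_k_by_dot_product(query, rows, k=2):
    print(cid, round(score, 3))
# c1 0.981
# c2 0.832
```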

```python
# Pseudo-code
query_vector = generate_embedding(expanded_query)
top_chunks = vector_search(query_vector)
```

Step 4: Full-Text Search

- A full-text search runs in parallel to complement the vector search. In SingleStore, this is done with the MATCH() AGAINST() syntax.
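Full-text scoring happens inside SingleStore, so this is only a stand-in to show why keyword search complements vectors: exact tokens such as invoice numbers or product codes score highly even when an embedding blurs them. It is a compact Okapi BM25 scorer (a common full-text ranking function) over in-memory chunks, not SingleStore's implementation; k1 = 1.2 and b = 0.75 are conventional defaults.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def bm25_scores(query: str, docs: dict[str, str], k1: float = 1.2, b: float = 0.75) -> dict[str, float]:
    """Score every document against the query with Okapi BM25."""
    if not docs:
        return {}
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    avgdl = sum(len(toks) for toks in tokenized.values()) / n_docs
    df = Counter(term for toks in tokenized.values() for term in set(toks))
    query_terms = set(tokenize(query))
    scores = {}
    for doc_id, toks in tokenized.items():
        tf = Counter(toks)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            denom = tf[term] + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores[doc_id] = score
    return scores


docs = {
    "c1": "Invoice INV-2291 was approved by finance.",
    "c2": "Finance approves invoices every Friday.",
    "c3": "The cafeteria menu changes weekly.",
}
scores = bm25_scores("INV-2291 approval", docs)
print(max(scores, key=scores.get))  # c1
```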

```python
# Pseudo-code
text_results = full_text_search(query)
```

Step 5: Merge & Rank Results

- The vector and text search results are combined and re-ranked by relevance. One setting worth experimenting with here is top k, the number of results kept; I got much better results with k = 10 or higher.

- Low-confidence results are filtered out.
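One detail worth calling out: dot-product scores and full-text relevance scores live on different scales, so a weighted sum is easier to reason about after normalizing each list. A minimal sketch, assuming min-max normalization, the same 0.7/0.3 vector/text weighting used in the SQL query later in this post, top k = 10, and a hypothetical confidence threshold of 0.2.

```python
def min_max(scores: dict[str, float]) -> dict[str, float]:
    """Rescale scores to [0, 1]; a list of identical scores maps to 1.0."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 1.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


def merge_and_rank(vector_scores, text_scores, w_vec=0.7, w_text=0.3, top_k=10, min_score=0.2):
    """Weighted fusion of normalized scores; a chunk missing from one list scores 0 there."""
    v, t = min_max(vector_scores), min_max(text_scores)
    combined = {
        cid: w_vec * v.get(cid, 0.0) + w_text * t.get(cid, 0.0)
        for cid in set(v) | set(t)
    }
    ranked = sorted(combined.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    # filter_low_confidence: drop anything under the threshold
    return [(cid, round(score, 3)) for cid, score in ranked if score >= min_score]


vector_scores = {"c1": 0.83, "c2": 0.80, "c3": 0.41}
text_scores = {"c2": 7.5, "c4": 2.0}
print(merge_and_rank(vector_scores, text_scores))  # [('c2', 0.95), ('c1', 0.7)]
```

Note that min-max always maps the weakest hit in each list to 0, even if it was a decent match. A rank-based alternative such as reciprocal rank fusion avoids the scaling question altogether.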

```python
# Pseudo-code
merged_results = merge_and_rank(top_chunks, text_results)
filtered_results = filter_low_confidence(merged_results)
```

Step 6: Retrieve Entities & Relationships

- Next, if entities and relationships exist for retrieved chunks, they are included in the response.
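Attaching knowledge to a retrieved chunk is a lookup keyed by its document. A minimal sketch, assuming relationships carry a `doc_id` (as in the Relationships join in the SQL query later in this post) and entities are fetched by the IDs those relationships reference; the dicts stand in for the Entities and Relationships tables and the sample rows are hypothetical.

```python
from collections import defaultdict

# Stand-ins for the Entities and Relationships tables (hypothetical sample rows).
ENTITIES = {
    1: {"entity_id": 1, "name": "SingleStore", "category": "Database"},
    2: {"entity_id": 2, "name": "Hybrid search", "category": "Feature"},
}
RELATIONSHIPS = [
    {"doc_id": "d1", "source_entity_id": 1, "target_entity_id": 2, "relation_type": "SUPPORTS"},
]

# Index relationships by document once, instead of scanning per result.
RELS_BY_DOC = defaultdict(list)
for rel in RELATIONSHIPS:
    RELS_BY_DOC[rel["doc_id"]].append(rel)


def fetch_knowledge(result: dict) -> tuple[list[dict], list[dict]]:
    rels = RELS_BY_DOC.get(result["doc_id"], [])
    entity_ids = {r["source_entity_id"] for r in rels} | {r["target_entity_id"] for r in rels}
    return [ENTITIES[e] for e in sorted(entity_ids)], rels


def enrich_result(result: dict, entities: list[dict], relationships: list[dict]) -> None:
    result["entities"] = entities
    result["relationships"] = relationships


results = [{"doc_id": "d1", "content": "Chunk text 1"}, {"doc_id": "d2", "content": "Chunk text 2"}]
for result in results:
    enrich_result(result, *fetch_knowledge(result))
print([e["name"] for e in results[0]["entities"]], results[1]["entities"])
# ['SingleStore', 'Hybrid search'] []
```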

```python
# Pseudo-code
for result in filtered_results:
    entities, relationships = fetch_knowledge(result)
    enrich_result(result, entities, relationships)
```

Step 7: Generate Final Answer

- Now we take the combined context, wrap it in a prompt, and send it to an LLM (I used gpt3o-mini) to generate the final response.
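How the context is packaged matters as much as what is retrieved. A minimal sketch of prompt assembly, assuming each result carries `doc_id`, `content`, and optional `entities`/`relationships` lists as in the enrichment step; the prompt wording, the `[source: <doc_id>]` tag format, and the 12,000-character budget are illustrative choices, not the prompt used in this project.

```python
def build_prompt(question: str, results: list[dict], max_chars: int = 12000) -> str:
    """Assemble ranked chunks plus their knowledge-graph facts into one prompt."""
    blocks, used = [], 0
    for r in results:
        names = {e["entity_id"]: e["name"] for e in r.get("entities", [])}
        facts = [
            f"- {names.get(rel['source_entity_id'], rel['source_entity_id'])} "
            f"{rel['relation_type']} "
            f"{names.get(rel['target_entity_id'], rel['target_entity_id'])}"
            for rel in r.get("relationships", [])
        ]
        block = f"[source: {r['doc_id']}]\n{r['content']}"
        if facts:
            block += "\nRelated facts:\n" + "\n".join(facts)
        if used + len(block) > max_chars:
            break  # crude character budget; results are ranked, so stop at the first overflow
        blocks.append(block)
        used += len(block)
    context = "\n\n".join(blocks)
    return (
        "Answer the question using only the context below. "
        "Cite the [source: ...] tags you relied on. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


result = {
    "doc_id": "d1",
    "content": "SingleStore supports vector and full-text search in one query.",
    "entities": [
        {"entity_id": 1, "name": "SingleStore"},
        {"entity_id": 2, "name": "Hybrid search"},
    ],
    "relationships": [{"source_entity_id": 1, "target_entity_id": 2, "relation_type": "SUPPORTS"}],
}
prompt = build_prompt("Which database supports hybrid search?", [result])
print(prompt)
```

Listing relationships as short "subject relation object" lines gives the model the extracted knowledge explicitly, rather than hoping it re-derives those facts from the raw chunk text.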

```python
# Pseudo-code
final_answer = generate_llm_response(filtered_results)
```

Step 8: Return Answer to User

- The system sends the response back as a structured JSON payload, along with the original database search results, so that sources can be identified for further debugging and tweaking if needed.
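A minimal sketch of such a response envelope, assuming the answer is returned together with the rows that produced it so a bad answer can be traced back to specific chunks; the field names and the 200-character snippet limit are hypothetical.

```python
import json


def build_response(query: str, answer: str, results: list[dict]) -> str:
    """Package the answer with its supporting rows for attribution and debugging."""
    payload = {
        "query": query,
        "answer": answer,
        "sources": [
            {
                "doc_id": r["doc_id"],
                "combined_score": r.get("combined_score"),
                "snippet": r["content"][:200],  # keep the payload small; full text stays in the DB
                "entities": [e["name"] for e in r.get("entities", [])],
            }
            for r in results
        ],
    }
    return json.dumps(payload, ensure_ascii=False)


body = build_response(
    "Which database supports hybrid search?",
    "SingleStore [source: d1].",
    [{"doc_id": "d1", "content": "SingleStore supports hybrid search.",
      "combined_score": 0.95, "entities": [{"name": "SingleStore"}]}],
)
print(json.loads(body)["sources"][0]["doc_id"])  # d1
```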

```python
# Pseudo-code
return_response(final_answer)
```

Now, here is the catch. End to end, the retrieval process was taking about 8 seconds, which was not acceptable.

Tracing the calls showed that the LLM calls had the longest response times (about 1.5 to 2 seconds each), while the SingleStore queries consistently returned in 600 milliseconds or less. After switching a few LLM calls to Groq, overall response time dropped to about 3.5 seconds. I think this could be improved further by making some of the calls in parallel instead of serially, but that is a project for another day.
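The parallelization idea can be sketched with asyncio. In the pseudo-code above, the full-text search only needs the preprocessed query, while the vector path needs expansion, then embedding, then search; so the full-text search can overlap the whole vector path, and wall-clock time becomes roughly the slower branch rather than the sum. Here `asyncio.sleep` stands in for network calls, and the 0.3-second latencies are illustrative, not measurements.

```python
import asyncio
import time


async def expand_query(q: str) -> str:
    await asyncio.sleep(0.3)  # stand-in for the LLM expansion call
    return q + " expanded"


async def embed_and_search(q: str) -> list[str]:
    await asyncio.sleep(0.3)  # stand-in for embedding + vector search
    return ["c1", "c2"]


async def full_text_search(q: str) -> list[str]:
    await asyncio.sleep(0.3)  # stand-in for the MATCH ... AGAINST query
    return ["c2", "c4"]


async def vector_path(q: str) -> list[str]:
    # These steps depend on each other, so they stay sequential.
    expanded = await expand_query(q)
    return await embed_and_search(expanded)


async def retrieve(q: str) -> tuple[list[str], list[str]]:
    # The full-text search does not depend on the vector path, so run both concurrently.
    vec_hits, text_hits = await asyncio.gather(vector_path(q), full_text_search(q))
    return vec_hits, text_hits


start = time.perf_counter()
vec_hits, text_hits = asyncio.run(retrieve("q3 revenue"))
elapsed = time.perf_counter() - start
print(vec_hits, text_hits, f"{elapsed:.2f}s")
```

With these stand-ins the run takes about 0.6 s instead of the 0.9 s a sequential version would need. Synchronous clients (such as `mysql.connector`) can join the same pattern by wrapping their calls in `asyncio.to_thread`.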

Finally, the kicker.

Given that we are using SingleStore, I wanted to see whether a single query could do the full retrieval, both because that is easier to manage, update, and improve over time, and because I wanted even better response times from the database. The assumption here is that LLMs will get better and faster in the near future, and I have no control over those (you can, of course, deploy a local LLM on the same network if you are really that serious about latency today).

Here is the code (a single file, for convenience) that now performs retrieval in a single query.

```python
import os
import json

import mysql.connector
from openai import OpenAI

# Database connection parameters (read from environment variables)
DB_CONFIG = {
    "host": os.getenv("SINGLESTORE_HOST", "localhost"),
    "port": int(os.getenv("SINGLESTORE_PORT", "3306")),
    "user": os.getenv("SINGLESTORE_USER", "root"),
    "password": os.getenv("SINGLESTORE_PASSWORD", ""),
    "database": os.getenv("SINGLESTORE_DATABASE", "knowledge_graph"),
}


def get_query_embedding(query: str) -> list:
    """
    Generate a 1536-dimensional embedding for the query using the OpenAI embeddings API.
    """
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
    response = client.embeddings.create(
        model="text-embedding-ada-002",
        input=query,
    )
    return response.data[0].embedding  # Extract the embedding vector


def retrieve_rag_results(query: str) -> list:
    """
    Execute the hybrid search SQL query in SingleStore and return the top-ranked results.
    """
    # Generate the query embedding and serialize it as a JSON array string
    query_embedding = get_query_embedding(query)
    embedding_str = json.dumps(query_embedding)

    # Hybrid search: weighted vector + full-text score, plus linked entities and relationships
    sql_query = """
        SELECT
            d.doc_id,
            d.content,
            (d.embedding <*> @qvec) AS vector_score,
            MATCH(TABLE Document_Embeddings) AGAINST(%s) AS text_score,
            (0.7 * (d.embedding <*> @qvec)
             + 0.3 * MATCH(TABLE Document_Embeddings) AGAINST(%s)) AS combined_score,
            JSON_AGG(DISTINCT JSON_OBJECT(
                'entity_id', e.entity_id,
                'name', e.name,
                'description', e.description,
                'category', e.category
            )) AS entities,
            JSON_AGG(DISTINCT JSON_OBJECT(
                'relationship_id', r.relationship_id,
                'source_entity_id', r.source_entity_id,
                'target_entity_id', r.target_entity_id,
                'relation_type', r.relation_type
            )) AS relationships
        FROM Document_Embeddings d
        LEFT JOIN Relationships r ON r.doc_id = d.doc_id
        LEFT JOIN Entities e ON e.entity_id IN (r.source_entity_id, r.target_entity_id)
        WHERE MATCH(TABLE Document_Embeddings) AGAINST(%s)
        GROUP BY d.doc_id, d.content, d.embedding
        ORDER BY combined_score DESC
        LIMIT 10;
    """

    conn = mysql.connector.connect(**DB_CONFIG)
    cursor = conn.cursor(dictionary=True)
    try:
        # Cast the JSON array to a VECTOR so the <*> (dot product) operator can use it;
        # this assumes the embedding column is declared as VECTOR(1536)
        cursor.execute("SET @qvec = (%s) :> VECTOR(1536)", (embedding_str,))
        # The same query text fills all three MATCH ... AGAINST placeholders
        cursor.execute(sql_query, (query, query, query))
        return cursor.fetchall()  # Retrieved chunks with scores, entities, and relationships
    finally:
        # Always release the cursor and connection, even if a query fails
        cursor.close()
        conn.close()
```

Lessons Learned

As you can imagine, it is one thing to build a “naive” chat-with-your-PDF RAG and another to push accuracy above 80% while keeping latency low. Throw structured data into the mix and you get so deep into the project that it becomes a full-time job 😅

I plan to keep tweaking, improving, and blogging about this project. In the short term, here are some ideas I want to explore next.

Accuracy Enhancements

Extraction:

- Externalize and experiment with entity extraction prompts.

- Summarize chunks before processing. I have a feeling this may have a non-trivial effect.

- Add better retry mechanisms for failures in different steps.

Retrieval:

- Use better query expansion techniques (custom dictionaries, industry-specific terms).

- Fine-tune the weights for vector vs. text search (these are already externalized in a YAML config file).

- Add a second LLM pass to re-rank the top results (I am hesitant to try this given the latency trade-off).

- Adjust retrieval window size to optimize recall vs. relevance.

- Generate chunk-level summaries instead of sending raw text to the LLM.

Wrapping It Up

In many ways, I am documenting this to remind myself what goes into building an enterprise RAG or KAG system today, given enterprise requirements. If you spot something naive in what I am doing, or have other ideas for improving it, please feel free to reach out here or on LinkedIn so that we can work on this together.

✌️